Non-uniform memory access (NUMA) is a computer memory design used in multiprocessing, where the memory access time depends on the memory location relative to the processor. Under NUMA, a processor can access its own local memory faster than non-local memory (memory local to another processor or memory shared between processors). The benefits of NUMA are limited to particular workloads, notably on servers where the data is often associated strongly with certain tasks or users. NUMA architectures logically follow in scaling from symmetric multiprocessing (SMP) architectures.

#Cache coherence in a shared-memory multiprocessor#

If p1 and p2 both attach to the shared memory asynchronously, nothing stops p2 from reading before p1 writes. You need some kind of data-ready flag which p2 checks with std::memory_order_acquire before reading the pointer. Or just spin on loading the pointer until you see a non-NULL value. (Use mo_acquire on an atomic load of the pointer to avoid compile-time reordering, or runtime reordering on non-x86, with stuff you access later using that pointer. Or really only mo_consume would be needed for using a pointer, but compilers strengthen that to mo_acquire. That's fine; on x86 acquire is free anyway.)

The motherboard of an HP Z820 workstation with two CPU sockets, each with its own set of eight DIMM slots surrounding the socket.

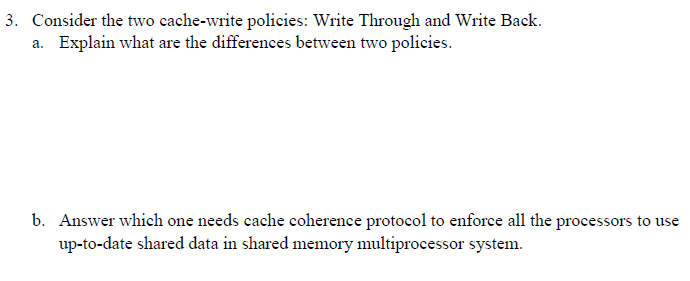
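The data-ready flag idea can be sketched roughly as follows. This is only an illustration under some assumptions: two threads stand in for p1 and p2, and the names `Channel` and `read_after_ready` are made up for the example. The writer fills in the data and the pointer first, then sets the flag with a release store; the reader spins on an acquire load of the flag and only then touches the pointer.

```cpp
#include <atomic>
#include <thread>

// Hypothetical stand-in for the shared region: a plain pointer guarded by a flag.
struct Channel {
    std::atomic<bool> ready{false};  // data-ready flag
    int* ptr = nullptr;              // plain (non-atomic) pointer, NULL until published
};

// Runs one writer ("p1") and one reader ("p2"); returns what the reader saw.
int read_after_ready() {
    int value = 0;   // the data that ptr will point to
    Channel ch;
    int seen = -1;

    std::thread reader([&] {
        // Wait until the writer says the pointer is valid.
        while (!ch.ready.load(std::memory_order_acquire)) {
            std::this_thread::yield();
        }
        // The acquire load saw the release store, so ptr and *ptr are visible here.
        seen = *ch.ptr;
    });

    std::thread writer([&] {
        value = 42;
        ch.ptr = &value;
        // Publish: everything written above becomes visible to a reader
        // that observes ready == true with an acquire load.
        ch.ready.store(true, std::memory_order_release);
    });

    writer.join();
    reader.join();
    return seen;
}
```

Calling `read_after_ready()` returns 42 regardless of which thread starts first, because the reader never dereferences the pointer before it has seen the flag.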

x86 is cache-coherent even across multiple sockets (like all other real-world ISAs that you can run std::thread across). Lack of coherence is definitely not your bug. Once a store commits to L1d cache in one core, no other core can load the old value. (Because their copies of the line have all been invalidated so the core doing the modification can have exclusive ownership: MESI.) x86's memory-ordering model is program order + a store buffer with store forwarding. Formal model: A better x86 memory model: x86-TSO. Almost certainly p2 is reading the shared memory before p1 writes it. Coherence doesn't create synchronization on its own.

I have two processes, p1 and p2, each running on a different core, say c1 and c2 (both cores are on the same physical processor). The two cores have separate L1 and L2 caches while sharing a common L3 cache. Both p1 and p2 use a pointer ptr (ptr being in shared memory).

Process p1 initializes ptr and p2 is supposed to simply use it. I am facing a crash in p2 because it sees ptr as NULL initially (though after some time, possibly because of cache coherence, p2 sees the correct value of ptr). I have the following questions related to this:

How can the above situation arise (p2 seeing a NULL value of ptr) even though some form of cache coherence protocol is in use?

In a shared bus/memory architecture, different processors (on different sockets) usually follow bus-snooping protocols for cache coherence. I want to know which cache coherence protocols are followed when two cores (both on the same physical processor) have private L1/L2 caches while sharing a common L3 cache. Is there a way to check which cache coherence protocol is being used (this is on an Ubuntu 16.04 system)?
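For the two-process case itself, one possible shape of a fix is to make ptr an atomic pointer in the shared region and have p2 spin until it is non-NULL. The sketch below is a guess at the setup, not the original code: it assumes Linux/POSIX, uses an anonymous `MAP_SHARED` mapping created before `fork()` so the region has the same address in both processes, and the names `Shared` and `p2_sees_initialized_data` are hypothetical. If the two processes attach to the segment separately, the mapping addresses may differ, and an offset would be safer to share than a raw pointer.

```cpp
#include <atomic>
#include <new>
#include <sched.h>
#include <sys/mman.h>
#include <sys/wait.h>
#include <unistd.h>

// Hypothetical layout of the shared region.
struct Shared {
    std::atomic<int*> ptr{nullptr};  // the shared pointer, NULL until p1 publishes it
    int data = 0;                    // what ptr will point to
};

// Parent acts as p1, child as p2. Returns true if p2 read the initialized data.
bool p2_sees_initialized_data() {
    void* mem = mmap(nullptr, sizeof(Shared), PROT_READ | PROT_WRITE,
                     MAP_SHARED | MAP_ANONYMOUS, -1, 0);
    if (mem == MAP_FAILED) return false;

    Shared* sh = new (mem) Shared();  // construct in the shared mapping
    // Atomics that are not lock-free may rely on per-process locks.
    if (!sh->ptr.is_lock_free()) { munmap(mem, sizeof(Shared)); return false; }

    pid_t child = fork();
    if (child < 0) { munmap(mem, sizeof(Shared)); return false; }

    if (child == 0) {
        // p2: spin until the pointer has been published, then use it.
        int* p;
        while ((p = sh->ptr.load(std::memory_order_acquire)) == nullptr) {
            sched_yield();
        }
        _exit(*p == 42 ? 0 : 1);
    }

    // p1: initialize the data first, then publish the pointer with release.
    sh->data = 42;
    sh->ptr.store(&sh->data, std::memory_order_release);

    int status = 0;
    waitpid(child, &status, 0);
    munmap(mem, sizeof(Shared));
    return WIFEXITED(status) && WEXITSTATUS(status) == 0;
}
```

With this ordering p2 can still arrive before p1 has written anything, but it waits instead of dereferencing NULL.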
